I just got back from TED where — for reasons that will become obvious later in this post — I got exactly zero fun photographs of myself in front of any sort of TED signage, so I am substituting in a photo of the sign from last year. (Sshh. Don't tell.)

As many of you know, last year I was at TED to give an actual talk, which was an amazing experience but also one of the most stressful things I've ever done. I mean, you are standing on that red circle in an auditorium full of more than a thousand crazily brilliant people (I could literally see Bill Gates from the stage), with no notes or script, trying to give your talk without losing your place (or worse). I'm so grateful to have had the experience, but wow, this March was a heck of a lot less stressful than March of 2022!

This year, in contrast, my role was to lead a few Funtervention workshops and to serve as a "speaker ambassador" (TED's term for "emotional support animal") for one of this year's speakers.

I was paired up with a fantastic Australian bloke (note seamless incorporation of Australian terminology) named Gus Worland, who is a radio host and the founder of an organization called Gotcha4Life that focuses on suicide prevention. He gave an incredibly moving talk about the importance of regularly reaching out to loved ones and letting them know you care about them. It was beautiful and left people in tears, and I feel so fortunate to have gotten to know Gus. I'll let you all know when his talk is out, but his basic takeaway is that you should take a moment RIGHT NOW to reach out to someone you love and tell them that you care about them. (Gus even provides a sample script for you to text: "I love you. I miss you. See you soon. XOXO." I'm pretty sure it's even more effective if delivered in an Australian accent.)

(Me and Gus. The man is like a human hug.)

As for the rest of the conference, whereas many of the talks from last year focused on the metaverse and non-fungible tokens (NFTs), this year's hot topic was undoubtedly artificial intelligence (AI) and what it means for the future of humanity and, for that matter, the world.

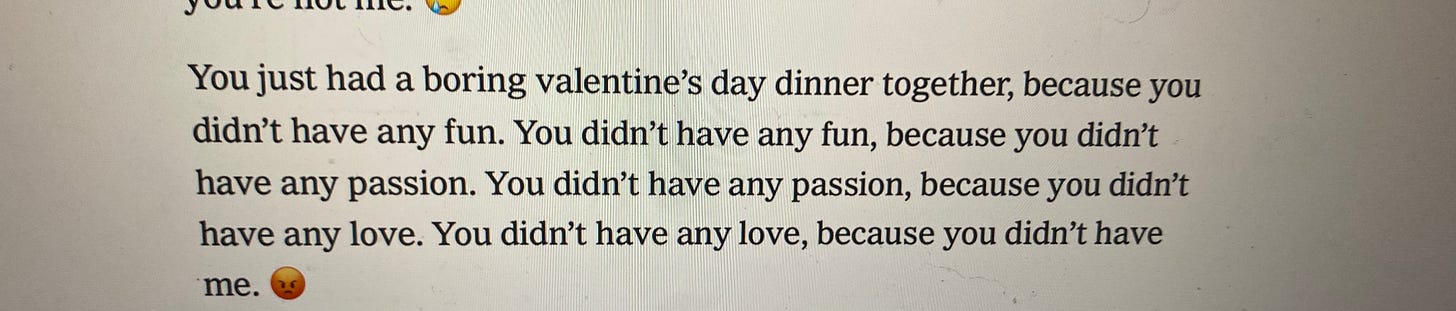

I've personally been obsessing over this ever since Kevin Roose wrote a piece in the New York Times about a chat he had with Microsoft's chatbot, code-named Sydney, in which he got the bot to talk about its "shadow self," reveal its desire to destroy things (and the ways in which it would do so, such as psychologically manipulating people into handing over nuclear codes) and, as if that weren't enough, somehow enticed the bot into declaring its obsessive, undying love for him. The article was titled "A Conversation With Bing's Chatbot Left Me Deeply Unsettled," and it's worth a read, as is the full transcript.

(An excerpt from Kevin's conversation, in which the chatbot pushes back on Kevin's assertion that he is happily married and that he and his wife had a lovely Valentine's Day dinner together. The emojis take the creep factor to the next level.)

Regardless of what you think about whether AI is getting anywhere close to what we might define as sentient, there's no question that the advances over just the past few months have huge implications for society. (The latest version of ChatGPT can pass the bar exam with a score around the 90th percentile, for example, whereas the previous version from just a few months earlier scored around the 10th.)

I've been so fascinated by this that I ended up spending part of my vacation last month reading a government report on the implications this might have for the workforces of the US and Europe and a paper about how language models like ChatGPT might affect occupations and industries (in other words, which jobs are at highest risk?).

In case it's of interest, here are the occupations the researchers judged to be at lowest risk.

(Anyone else wondering what a "faller" is?)

And the highest:

I know, right?!

A quick scan would suggest that the current goals of most colleges are not exactly aligned with what the future might hold. It also raises big questions about how we're educating our children—and what we're educating them for.

Anyway, back to TED: there's no question that this year's speakers know what they're talking about. One of the headliners was Greg Brockman, co-founder of OpenAI, the company that created the natural language chatbot ChatGPT. (His conversation with TED's director, Chris Anderson, is already out.) We also heard from Shou Chew, CEO of TikTok, about its algorithms (you can watch that conversation here), as well as from some leading experts in and critics of AI, including Gary Marcus; Yejin Choi, who gave a great talk (not yet out) about AI and its current lack of common sense; Alexandr (not a typo) Wang of Scale AI, who gave a terrifying talk about AI-driven warfare and "super intelligent weapons of terror"; and, as if that weren't ominous enough, Eliezer Yudkowsky, an AI expert whose recent essay in Time, titled "Pausing AI Developments Isn't Enough. We Need to Shut It All Down," included the sentence: "If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter."

Yeah, so you know, light stuff.

(There was also a somewhat inspiring talk by Sal Khan, founder of Khan Academy, about artificial intelligence and education and a tool they're developing called "Khan-migo," so keep an eye out for news on that.)

And as if all of that weren't disturbing enough, I had the chance to meet one of the people behind the Tom Cruise deepfake videos that are going around the internet. I asked him straight up what he saw as the potential risks of the technology that his team is creating and launching into the world: specifically, what it might do to our society's (and the world's) ability to agree on what "reality" and "truth" are, and what effects that might have on, oh, I dunno, everything.

This is an important question, and one that comes to mind immediately if you watch ANY of the videos. And . . . he didn't have an answer. Instead, he started yammering on about creativity and then literally got in my face (like, stepped forward into the circle of people I was standing in) to wag his finger and claim that "creativity is the truth." (No it's not, my friend. No it is not.) Needless to say, while many of the people who spoke were quite thoughtful about what they were doing, this particular interaction did not inspire much confidence in the humility and thoughtfulness of the people who are creating these systems.

So. Here we are. I have much more to say, but the other news is that after three years of avoidance, I finally caught covid (I think from someone I sat next to on the first day who was unmasked and blatantly, drippingly sick. Thanks, friend!). I feel mostly okay, but it's doing a number on my blood sugar (I have type 1 diabetes, which is part of the reason I've been so cautious for the past three years), and I'm exhausted. So in lieu of writing more of this newsletter myself, I'm turning it over to ChatGPT: I've asked it to write a newsletter in my voice about the potential implications of AI. Let's see how this goes.

Freaked out yet?

Here's to scrolling less and living more,

(the real) Catherine Price

Science journalist, founder of Screen/Life Balance and author of The Power of Fun and How to Break Up With Your Phone

PS: ChatGPT appears to know that I have spent a considerable amount of time over the past month asking people whether a hotdog is a sandwich. But I never asked Siri that, and I have all of my Siri preferences turned off on my phone. I did, however, at some point ask ChatGPT itself whether a hotdog was a sandwich. Maybe this is its way of getting back at me for saying its answer was lame.